Neurotechnology Research

Pioneering brain-computer interfaces and neural signal processing technologies that transform how we interact with machines.

Stress Detection EEG Applied to Poker

Building on our previous emotion detection research, this project focuses on stress detection during poker gameplay using a redesigned, discreet EEG headset. The system is fully concealed within a baseball cap, allowing unobtrusive monitoring during competitive play.

Discreet EEG System Development

Signal processing hardware and electrode interface components before cap integration

Initial EEG data collection for cognitive state classification

Real-world testing during poker gameplay with EEG monitoring and data collection

Several discreet EEG headsets at various stages of assembly and testing, built for multiple poker players

Interior view showing fully integrated electrode array and signal processing electronics

Conference presentation displaying real-time biofeedback dashboard with cognitive state monitoring

Technical Improvements

- Complete concealment within baseball cap

- Miniaturized signal processing electronics

- Real-time stress level monitoring

- Wireless data transmission capabilities
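The project does not describe how its real-time stress level is computed. One proxy often used in EEG stress studies is the ratio of beta (13-30 Hz) to alpha (8-13 Hz) power, recomputed over a sliding window. The sketch below assumes that metric, a single channel, and a 250 Hz sampling rate; `FS`, `band_power`, `stress_index`, and `sliding_stress` are hypothetical names, not the team's implementation.

```python
import numpy as np
from scipy.signal import welch

FS = 250  # Hz; assumed sampling rate, not stated in the project description


def band_power(x, fs, lo, hi):
    """Sum the Welch power spectral density of x over [lo, hi) Hz."""
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), fs))  # ~1 Hz resolution
    mask = (freqs >= lo) & (freqs < hi)
    return np.sum(psd[mask]) * (freqs[1] - freqs[0])


def stress_index(x, fs=FS):
    """Hypothetical stress proxy: beta (13-30 Hz) power divided by alpha (8-13 Hz) power."""
    return band_power(x, fs, 13, 30) / band_power(x, fs, 8, 13)


def sliding_stress(x, fs=FS, win_s=2.0, step_s=0.5):
    """Recompute the index over overlapping windows, as a streaming loop would."""
    win, step = int(win_s * fs), int(step_s * fs)
    return np.array([stress_index(x[i:i + win], fs)
                     for i in range(0, len(x) - win + 1, step)])


if __name__ == "__main__":
    # Synthetic test signal: 10 s alpha-dominated ("calm"), then 10 s beta-dominated.
    rng = np.random.default_rng(0)
    t = np.arange(10 * FS) / FS
    calm = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
    tense = np.sin(2 * np.pi * 20 * t) + 0.1 * rng.standard_normal(t.size)
    scores = sliding_stress(np.concatenate([calm, tense]))
    print(scores[:3].round(3), scores[-3:].round(1))
```

On the synthetic signal, the index stays well below 1 during the alpha-dominated half and rises sharply once beta dominates. A live system would feed each new window from the wireless stream into `stress_index` instead of slicing a stored array.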

Research Applications

This discreet system enables unobtrusive monitoring of cognitive load and stress responses during competitive gaming, with potential applications in sports psychology, performance optimization, and behavioral research in naturalistic settings.

Project Documentation

Project Presentation

Poker stress detection research slideshow and methodology

Emotion Detection with EEG

Research focused on developing wearable EEG systems capable of real-time emotion recognition. This work has applications in mental health monitoring, human-computer interaction, and adaptive user interfaces.

Development Process & Hardware

Branding: customized cap with CruX logo

3D CAD modeling of the EEG electrode casings: designed so that the gel electrodes can be removed and cleaned

Electrode integration: electrode casings were 3D printed with flexible TPU for user comfort

Completed discreet EEG headset: worn by one of our project presenters

Research Areas

- Signal acquisition and preprocessing

- Feature extraction from neural oscillations

- Deep learning classification models

- Wearable device integration
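The first two research areas, preprocessing and feature extraction from neural oscillations, can be illustrated with a common pipeline: band-pass filter each channel, estimate power in the standard EEG bands, and add frontal alpha asymmetry, a valence marker frequently reported in the emotion-recognition literature. This is a sketch under stated assumptions (250 Hz sampling, channel 0 = left frontal, channel 1 = right frontal, conventional band edges); the team's actual features and their deep learning model are not described here, and all names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, welch

FS = 250  # Hz; assumed sampling rate

# Conventional EEG bands in Hz; exact edges vary between studies.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 45)}


def preprocess(eeg, fs=FS, lo=1.0, hi=45.0):
    """Zero-phase band-pass filter; eeg has shape (channels, samples)."""
    sos = butter(4, [lo, hi], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def band_powers(eeg, fs=FS):
    """Log band power per channel, returned with shape (channels, bands)."""
    freqs, psd = welch(eeg, fs=fs, nperseg=fs, axis=-1)
    df = freqs[1] - freqs[0]
    feats = [psd[:, (freqs >= lo) & (freqs < hi)].sum(axis=-1) * df
             for lo, hi in BANDS.values()]
    return np.log(np.stack(feats, axis=-1))


def frontal_alpha_asymmetry(feats, left=0, right=1):
    """ln(alpha power, right) - ln(alpha power, left)."""
    a = list(BANDS).index("alpha")
    return feats[right, a] - feats[left, a]


def feature_vector(eeg, fs=FS):
    """Flatten band powers and append asymmetry, ready for a downstream classifier."""
    feats = band_powers(preprocess(eeg, fs), fs)
    return np.append(feats.ravel(), frontal_alpha_asymmetry(feats))
```

For a two-channel recording this yields 11 features (5 bands per channel plus the asymmetry term), which a classifier, deep or otherwise, would consume one window at a time.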

Applications

Creating systems that monitor emotional states in real time, enabling personalized interventions and adaptive technologies that respond to a user's emotional needs.

Project Documentation

Project Presentation

Complete project slideshow and results

California Neurotechnology Conference

Our emotion detection EEG project was presented at the California Neurotechnology Conference in a competitive research presentation. The project won first place, earning recognition for innovation in wearable neurotechnology and real-time emotion recognition systems.

Award: First Place Winner - Neuroscience and Neurotechnology Primer with Queen's University

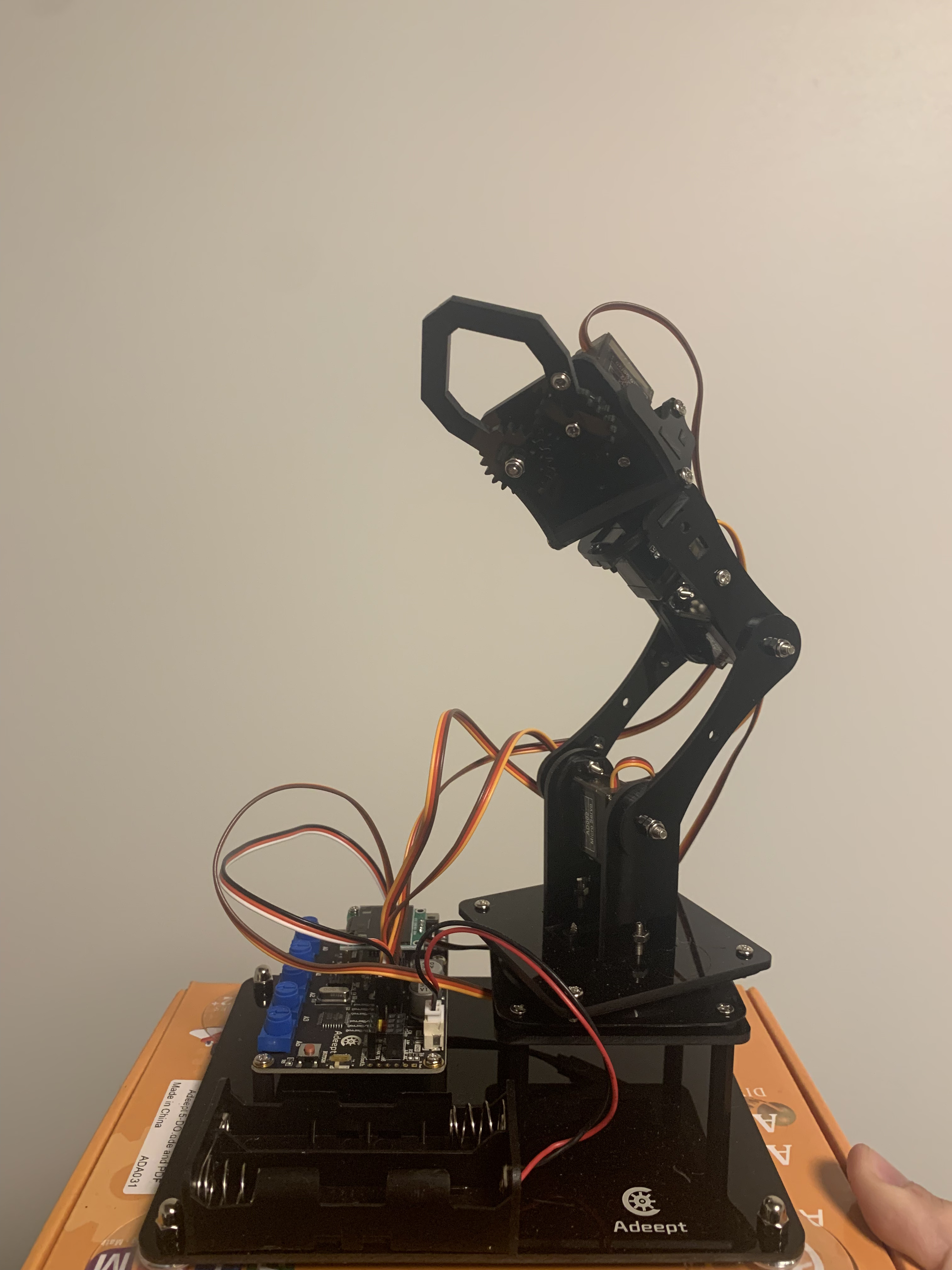

CruX - Brain-Controlled Pincher

Developing a brain-controlled prosthetic pincher that translates neural signals into precise mechanical movements. A working device of this kind would represent a significant advancement in assistive technology for individuals with motor impairments.

Key Features

- Real-time EEG signal processing

- Machine learning-based intent recognition

- Precise motor control algorithms

- User-adaptive calibration system
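The listed user-adaptive calibration system is not specified further. One simple form it could take: during a short calibration session, record a scalar feature for rest trials and for motor imagery trials, then fit per-user normalization statistics and a decision threshold. The sketch below assumes the feature is larger during imagery than at rest and places the threshold midway between the two class means; `UserCalibration`, `calibrate`, and `classify` are hypothetical names.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class UserCalibration:
    """Hypothetical per-user calibration: z-score statistics plus a threshold."""
    mean: float
    std: float
    threshold: float


def calibrate(rest_scores, imagery_scores):
    """Fit normalization on all trials; threshold midway between class means (z units)."""
    rest = np.asarray(rest_scores, dtype=float)
    imag = np.asarray(imagery_scores, dtype=float)
    pooled = np.concatenate([rest, imag])
    mean, std = pooled.mean(), pooled.std() + 1e-12  # guard against zero variance
    z_rest, z_imag = (rest - mean) / std, (imag - mean) / std
    return UserCalibration(mean, std, (z_rest.mean() + z_imag.mean()) / 2)


def classify(score, cal):
    """True if the normalized live score crosses this user's threshold."""
    return bool((score - cal.mean) / cal.std > cal.threshold)
```

Re-running `calibrate` at the start of each session lets the same decoder adapt to a new user or to shifts in electrode contact.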

Technical Approach

Combining signal processing with machine learning algorithms to decode motor intentions from EEG activity, with the goal of enabling intuitive control of prosthetic devices.
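A classic starting point for decoding motor intentions from EEG is event-related desynchronization: imagining a right-hand movement typically reduces mu-rhythm (about 8-12 Hz) power over the left motor cortex (electrode C3), and left-hand imagery reduces it over C4. The sketch below compares mu-band log power at C3 and C4 and maps the result to a pincher command. The electrode choice, 250 Hz sampling rate, `margin` value, and imagery-to-command mapping are all assumptions for illustration, not the project's documented design.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 250  # Hz; assumed sampling rate


def mu_log_power(x, fs=FS, band=(8.0, 12.0)):
    """Log-variance of one channel after zero-phase band-pass filtering to the mu band."""
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    return np.log(np.var(sosfiltfilt(sos, x)))


def lateralization(c3, c4, fs=FS):
    """Positive when mu power drops over C3 relative to C4 (typical of right-hand imagery)."""
    return mu_log_power(c4, fs) - mu_log_power(c3, fs)


def decode(c3, c4, fs=FS, margin=0.5):
    """Map the lateralization index to a hypothetical pincher command."""
    li = lateralization(c3, c4, fs)
    if li > margin:
        return "close"  # right-hand imagery (hypothetical mapping)
    if li < -margin:
        return "open"   # left-hand imagery (hypothetical mapping)
    return "hold"       # no clear lateralized desynchronization
```

The dead zone set by `margin` keeps the pincher still when neither hemisphere shows a clear drop. In practice, motor imagery signals are far noisier than this idealized model, which is consistent with the classification difficulties described below.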

Outcome

Our team was partially successful in developing the brain-controlled interface. While we made significant progress in EEG signal processing and mechanical control, we encountered challenges with motor imagery classification that prevented full implementation of the intended brain-control functionality. This experience provided valuable insights into the complexities of brain-computer interface development.

Hardware Implementation

Physical implementation with Arduino control board and multi-jointed articulated arm